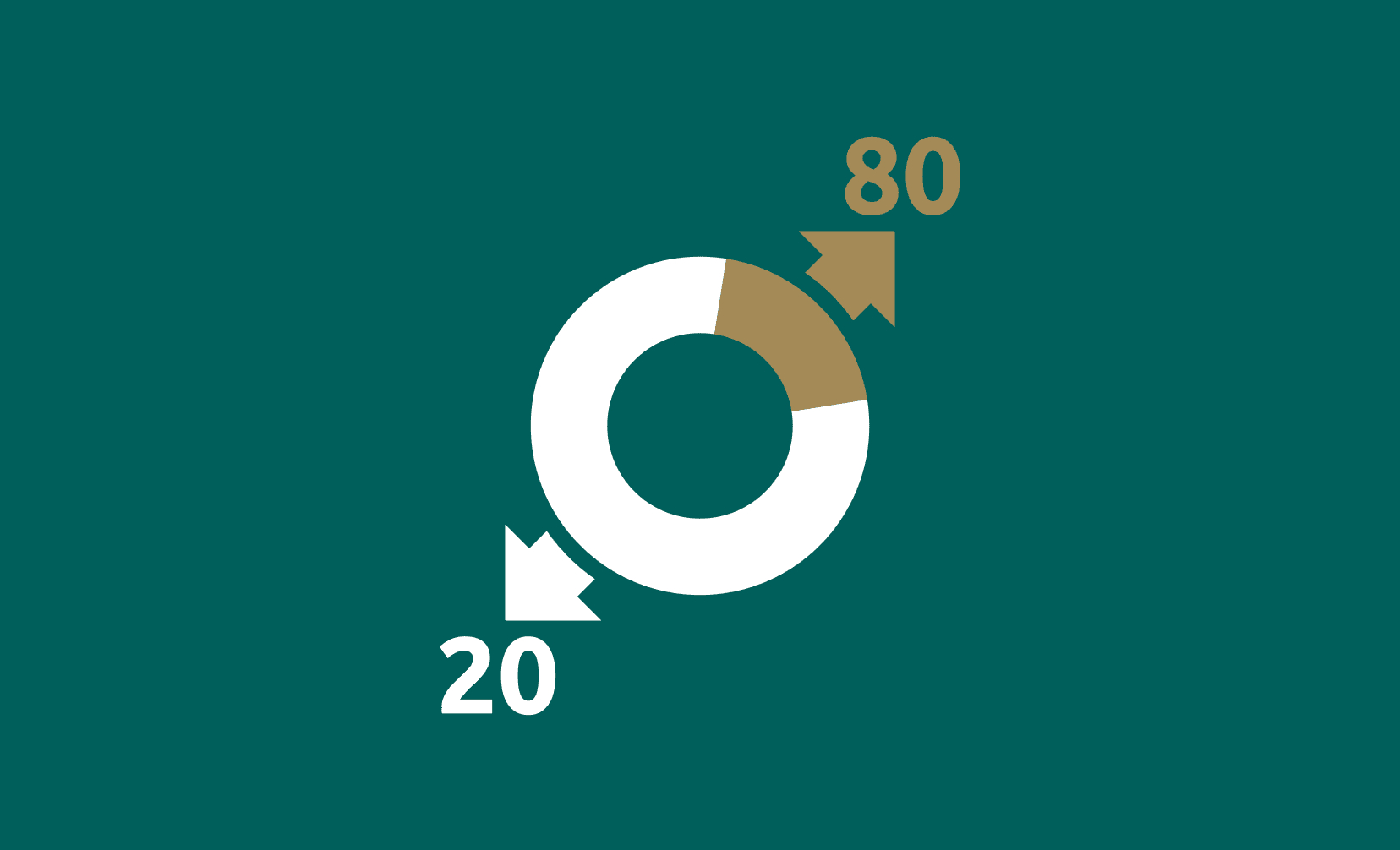

There is a useful observation about the world that is often applied to software development called the Pareto principle or Pareto’s law.

This principle suggests that in many situations 80% of the results come from 20% of the causes.

For example, Pareto observed that, in his time, 80% of the land in Italy was owned by 20% of the population.

Since then, many people, including Pareto himself, have applied this 80-20 rule to many other areas of life, from economics to business and even software development.

The problem with generalizations

The biggest problem I have with Pareto’s law is that it is applied way too often to too many situations. In many cases, especially in software development, Pareto’s law becomes a self-fulfilling prophecy: the more you look for Pareto’s law, the more you magically seem to find it.

None of this is to say that Pareto’s law isn’t a real thing. Of course it is. If you go and take a look at real hard numbers about distributions of things, you’ll find Pareto’s law all over the place in your statistical data. But, at the same time, if you go looking for the number 13, you’ll find an alarming number of occurrences of that two-digit number as well, seemingly above all others.

It is very tempting to force things that don’t quite fit generalizations into those generalizations. How often do we use the words “always” and “never”? How often do we fudge the data just a little bit so that it fits into that nice 80-20 distribution? 82% is close enough to 80, right? And of course 17.5% is close enough to just call it 20, after all.

Not only can you take just about any piece of data and make it fit into Pareto’s law by changing what you are measuring a little bit and fudging the numbers just a little if they are close enough, but you can also take just about any problem domain and easily, unconsciously, find the data points which will fit nicely into Pareto’s law. There is a good chance you are doing this—we all are. I do it myself all the time, but most of the time I am not aware of it.

I’ve found myself spouting off generalizations about data involving Pareto’s law without really having enough evidence to back up what I am saying. It is really easy to assume that some data will fit into Pareto’s law, because deep down inside I know I can make it fit if I have to.

Seeing the world through Pareto-colored glasses

Again, this doesn’t mean that Pareto’s law isn’t correct a large amount of the time. It means that when you assume that any data that appears to obey this law actually does, or worse yet, that all data MUST obey this law, you are severely limiting your perspective and restricting your options to those that already fit your preconceived ideas.

Sometimes I wish I had never heard of Pareto’s law, so that I wouldn’t be subject to this bias.

Let me give you a bit of a more concrete example.

Suppose you blindly assume that 80% of your application’s performance bottleneck comes from 20% of your code. In that case, you might be right, but you might also be wrong. It is entirely feasible that there are some parts of your code that contribute more or less to the performance of the application. It is also pretty likely that there are some bottlenecks or portions of code that heavily impact the performance of your application. But, if you go in with the assumption that the ratio is 80-20, you may spend an inordinate amount of time looking for a magical 20% that doesn’t exist instead of applying a more practical method of looking for what the actual performance problems are and then fixing them in order of impact.
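As a minimal sketch of that more practical method, assume you already have per-function timings from a profiler. The function names and numbers below are hypothetical, made up purely for illustration. The idea is to rank by measured cost and read off the cumulative share, rather than deciding up front what the ratio should be.

```python
def rank_by_impact(timings):
    """Return (name, seconds, cumulative_share) tuples, most expensive first."""
    total = sum(timings.values())
    if total == 0:
        return []
    # Sort by measured time, largest first, so fixes can be tackled in order of impact.
    ranked = sorted(timings.items(), key=lambda item: item[1], reverse=True)
    result = []
    running = 0.0
    for name, seconds in ranked:
        running += seconds
        result.append((name, seconds, running / total))
    return result


# Hypothetical profiler output: seconds spent in each function.
timings = {
    "parse_request": 1.0,
    "load_user": 3.0,
    "render_page": 4.0,
    "write_log": 2.0,
}

for name, seconds, cumulative in rank_by_impact(timings):
    print(f"{name:15s} {seconds:4.1f}s  cumulative {cumulative:.0%}")
```

With these made-up numbers, no single function dominates: the most expensive one accounts for 40% of the time, and it takes three of the four to reach 90%. Whatever the real numbers turn out to be, the ranking tells you where to start without assuming a ratio.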

The same applies to bugs or testing. If we blindly assume that 20% of the code generates 80% of the bugs, or that 20% of our tests test 80% of our system, we are drawing pretty large conclusions about how our software works that may or may not be correct. What happens when you fix all the bugs caused by the 20% of code that generates 80% of them? Does a new section of code now magically produce 80% of the bugs? If 20% of your test cases test 80% of your code, can’t you just create those? Why create another 80% to only test another 20%? And if you did follow that advice, then wouldn’t you have the situation where 100% of your tests tested 80% of your code?

The problem is that when you blindly assume Pareto’s law applies, you start making all kinds of incorrect assumptions about data and only see what you expect.

So, was Pareto wrong?

In short, no. He wasn’t wrong. Pareto’s principle is a thing. In many cases, it is useful to observe that a small number of causes are responsible for a majority of effects.

But, it is not useful to apply this pattern everywhere you can. The observation of the data should guide the conclusion and not the other way around.

I find it more useful, especially in software development, to ask the question “is it possible to find a small thing that will have a great effect?”

A good book on this very subject is The 4-Hour Chef. Although I don’t always agree with Tim Ferriss, he is definitely the master of doing more with less and talks frequently about concepts like the minimum effective dose.

In other words, given a particular situation, can I find a small thing I can do, or change I can make, that will give me the biggest bang for my buck?

Sometimes the answer is actually “no.” Sometimes, no matter how hard we try, we just can’t find a minority that influences the majority. Sometimes the bugs are truly evenly distributed throughout the system. Sometimes the contributions of team members are fairly equal. One team member is not always responsible for 80% of the results.
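One way to check, rather than assume, is to compute how much of the total the top 20% actually accounts for. The sketch below is only an illustration: the `top_share` helper and the bug counts per module are hypothetical, not data from a real system.

```python
import math


def top_share(counts, fraction=0.2):
    """Share of the total contributed by the top `fraction` of items."""
    total = sum(counts)
    if total == 0:
        return 0.0
    # Always look at at least one item, rounding the group size up.
    k = max(1, math.ceil(len(counts) * fraction))
    top = sorted(counts, reverse=True)[:k]
    return sum(top) / total


# Hypothetical bug counts per module for two different systems.
skewed = [40, 3, 2, 2, 1, 1, 1, 0, 0, 0]
even = [5, 5, 5, 5, 5, 5, 5, 5, 5, 5]

print(f"skewed: top 20% of modules hold {top_share(skewed):.0%} of the bugs")
print(f"even:   top 20% of modules hold {top_share(even):.0%} of the bugs")
```

In the first made-up system, the top two modules hold 86% of the bugs, so hunting for a small culprit makes sense. In the second, the top two hold exactly 20%, which is what an even distribution looks like, and no amount of searching will turn up a magical minority.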

And let’s not forget about synergy, which is basically when 1 + 1 is equal to 3 or more. Sometimes it is the combination of things that makes the whole, and separating out the parts at all greatly reduces the function.

For example: eggs, sugar, flour and butter can be used to make cake, and you could say that 80% of the “cakiness” comes from 20% of the ingredients. But if you leave one of those ingredients out, you’ll quickly find that 100% of them are necessary. It doesn’t even make sense to try to figure out which ingredient is most important, because each ingredient functions much differently alone than it does in combination with the others.

In software development this is especially true. Often in complex systems, all kinds of interactions between different components combine to create a particular performance profile or bug distribution. Hunting for the magical 20% is, in many cases, as futile as saying eggs are responsible for 80% of the “cakiness” of cake.